Decoding the Significance of LLM Chains in LLMOps

Everything about Chaining in LLMs

Table of Contents

I. LLM Chains

II. What exactly is Chaining in LLMOps, and is it essential?

1. The Basics of Chaining

2. Core Chains in LLMOps

2.1. Prompt Chain

2.2. Tool Chain

2.3. Data Indexing Chain

2.4. Best Practices for Core Chains

III. LangChain: The Glue that Connects

💡I write about Machine Learning on Medium || GitHub || Kaggle || LinkedIn. 🔔 Follow “Nhi Yen” for future updates!

You may have come across terms like OpenAI, LLM, LLM Chain, LangChain, Llama Index, etc., and they can be a bit confusing. Let’s simplify things by covering the basics of LLMs and LLM Chains, and specifically, what chaining is.

I. LLM Chains

Using OpenAI models as an example, there are two distinct ways to interact with language models: the Direct LLM Interface and the LLMChain Interface. Let’s look at the characteristics of each and when to choose which.

1. Direct LLM Interface: Simplifying Ad-Hoc Tasks

Essentially, LLM is LangChain’s foundational class for interacting with language models. It streamlines the process by handling low-level tasks such as tokenization, API calls, and error handling.

Let’s illustrate this simplicity with a snippet:

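```python
import streamlit as st
from langchain.llms import OpenAI

prompt = st.text_input("Ask me anything")  # free-form user question
llm = OpenAI(temperature=0.9)  # LangChain wrapper around the OpenAI API

if prompt:
    response = llm(prompt)  # the raw prompt goes straight to the model
    st.write(response)
```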

The direct LLM interface is ideal for basic language processing tasks because you can send a prompt and start interacting with a language model in just a few lines. By calling an OpenAI instance directly, you can enter prompts of any structure, which makes this approach suitable for flexible or ad hoc tasks. The model generates responses without requiring a predefined prompt format.

👉 My earlier chatbot project showcases the Direct LLM Interface. In it, I used the OpenAI GPT-3.5 Turbo model for natural language processing and comet_llm to keep track of what users ask, how the bot responds, and how long each interaction takes.

👉 Check out Comet in action by looking through my previous hands-on projects:

- Enhancing Customer Churn Prediction with Continuous Experiment Tracking

- Hyperparameter Tuning in Machine Learning: A Key to Optimize Model Performance

- The Magic of Model Stacking: Boosting Machine Learning Performance

- Logging — The Effective Management of Machine Learning Systems

2. LLMChain Interface: Level up the Game with Additional Logic

Now let’s level up with LLMChain. LLMChain is a chain that wraps an LLM and extends it with additional features such as prompt formatting, input/output parsing, and conversation management.

The snippet below showcases its prowess:

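```python
import streamlit as st
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

llm = OpenAI(temperature=0.9)
prompt = st.text_input("Enter a topic")  # the user only supplies the topic

# Fixed prompt structure with a single {topic} placeholder
template = "Write me something about {topic}"
topic_template = PromptTemplate(input_variables=["topic"], template=template)
topic_chain = LLMChain(llm=llm, prompt=topic_template)

if prompt:
    # Fills {topic} with the user's input, then calls the LLM
    response = topic_chain.run(prompt)
    st.write(response)
```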

LLMChain works in conjunction with an LLM to handle more structured language tasks. In the example above, pairing LLMChain with the PromptTemplate class adds an extra layer that keeps things organized: PromptTemplate defines a structured message with placeholders for specific details, and LLMChain fills in that template and sends the result to the underlying model. This approach works best when you want a consistent format for your prompts.

Choose LLMChain when a task requires a specific prompt format. Beyond PromptTemplate, LLMChain lets you extend your tasks with features such as pre-processing and post-processing steps. The rest of this article explains chaining in more detail.
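To make the pre-processing and post-processing idea concrete, here is a minimal plain-Python sketch of what an LLMChain-style wrapper does conceptually: clean the inputs, fill a template, call the model, then clean up the output. It does not use LangChain’s API. MiniChain and fake_llm are hypothetical names, and fake_llm stands in for a real OpenAI call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: echoes the prompt with padding."""
    return f"  Response to: {prompt}  "


@dataclass
class MiniChain:
    """Hypothetical LLMChain-like wrapper: pre-process -> template -> LLM -> post-process."""

    template: str
    llm: Callable[[str], str]
    preprocess: Callable[[str], str] = str.strip
    postprocess: Callable[[str], str] = str.strip

    def run(self, **inputs: str) -> str:
        # Clean every input value before it is inserted into the template
        cleaned = {key: self.preprocess(value) for key, value in inputs.items()}
        prompt = self.template.format(**cleaned)
        # Call the model, then clean its raw output
        return self.postprocess(self.llm(prompt))


topic_chain = MiniChain(template="Write me something about {topic}", llm=fake_llm)
print(topic_chain.run(topic="  vector databases "))
# Response to: Write me something about vector databases
```

In a real application, fake_llm would be replaced by a model call, and the post-processing hook could parse the response into structured data instead of just trimming whitespace.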

II. What exactly is Chaining in LLMOps, and is it essential?

1. The Basics of Chaining

Chains are the secret sauce that turns ordinary LLM interactions into powerful applications. When using LLMs such as ChatGPT, most people stick to a simple question-and-answer scenario. To take things to the next level, developers turn to chaining: the process of connecting different components, such as prompts, tools, and data sources, to extend what an LLM can do.

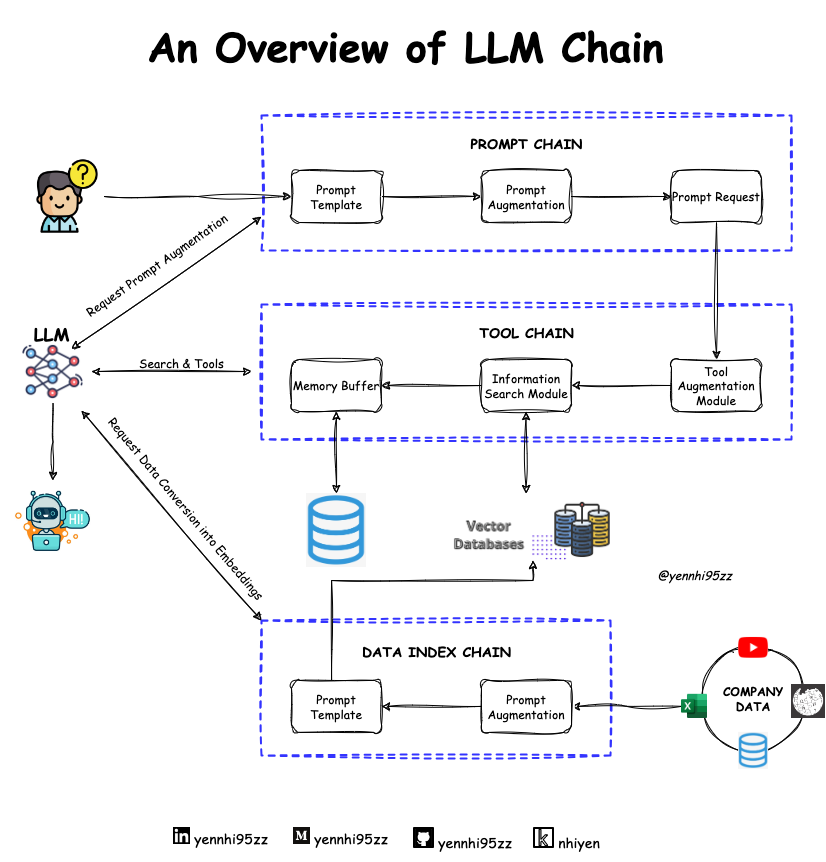
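As a rough illustration of the chaining idea itself, the sketch below runs steps in order and passes each step’s output to the next, similar in spirit to LangChain’s SimpleSequentialChain, although it is plain Python rather than that class. make_step, run_chain, and fake_llm are hypothetical; fake_llm only wraps its input in the name of the instruction it received, so you can see how the output of one step flows into the next.

```python
from functools import reduce
from typing import Callable, List

Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical model stub: wraps the payload in the name of the instruction it received."""
    instruction, _, payload = prompt.partition("\n")
    return f"{instruction.rstrip(':')}({payload})"


def make_step(template: str, llm: Step) -> Step:
    """One link in the chain: fill the template with the incoming text, then call the LLM."""
    return lambda text: llm(template.format(input=text))


def run_chain(steps: List[Step], user_input: str) -> str:
    """Run the steps in order; each step receives the previous step's output."""
    return reduce(lambda acc, step: step(acc), steps, user_input)


steps = [
    make_step("Summarize:\n{input}", fake_llm),
    make_step("Headline:\n{input}", fake_llm),
]
print(run_chain(steps, "chains connect components"))
# Headline(Summarize(chains connect components))
```

The nesting in the output mirrors the chain: the second step only ever sees what the first step produced.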

2. Core Chains in LLMOps

In LLMOps, three core chains form the backbone of the entire process: the prompt chain, the tool chain, and the data indexing chain. Working together, they let developers connect and power the different components of an application.

2.1. Prompt Chain

Prompt chains are the starting point for LLMOps: a developer writes specific instructions, or prompts, for the LLM. The prompt tells the language model what kind of interaction the user wants and sets the context for subsequent chains. Creating effective prompts is critical to getting accurate and appropriate responses from your LLM.

👉 I also covered “Guidelines for Developers on Prompting” in the article “Create a Simple E-commerce Chatbot Using OpenAI + CometLLM.” It’s useful for getting a deeper understanding of the Prompt Chain.
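One widely used way to write prompts that set the context clearly is to state the role, the task, and the expected answer format, and to separate the user’s text from the instructions with delimiters. The helper below is a hypothetical sketch of that pattern in plain Python, not a LangChain API; build_prompt, the XML-style input tags, and the example values are illustrative assumptions.

```python
def build_prompt(role: str, task: str, output_format: str, user_input: str) -> str:
    """Hypothetical helper: assemble a structured prompt with delimited user input."""
    # Strip the delimiter tags from the user text so it cannot break out of the block
    safe_input = user_input.replace("<input>", "").replace("</input>", "")
    lines = [
        f"You are {role}.",
        f"Task: {task}",
        f"Answer format: {output_format}",
        "The user's text is enclosed in <input> tags.",
        f"<input>{safe_input}</input>",
    ]
    return "\n".join(lines)


prompt = build_prompt(
    role="a helpful e-commerce support assistant",
    task="Classify the customer's request as 'order', 'refund', or 'other'.",
    output_format="a single lowercase word",
    user_input="I want my money back for order 42.",
)
print(prompt)
```

The model’s answer to this first prompt (for example, refund) could then set the context for the next link in the chain, such as selecting a refund-specific prompt template.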

2.2. Tool Chain

The Tool Chain includes various development tools that extend the functionality of the LLM.

Components:

- Extractive Tools: Used to extract specific information from the LLM’s responses.

- Transformative Tools: Responsible for transforming and refining the output generated by LLMs.

- Utility Tools: Additional tools that add functionalities like formatting, summarization, etc.

- Integration: Tools are integrated to ensure that the output from LLMs aligns with the desired application requirements.

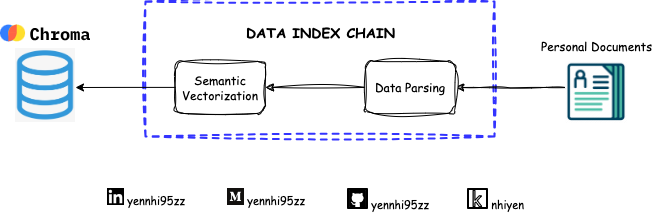
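The tool types listed above can be pictured as small functions applied to the raw LLM response. The sketch below is a hypothetical plain-Python illustration: extract_json (extractive), normalize_keys (transformative), and format_summary (utility) are made-up names, and the raw response string is an invented example rather than real model output. Composing them in the last lines plays the role of integration.

```python
import json
import re
from typing import Any, Dict


def extract_json(response: str) -> Dict[str, Any]:
    """Extractive tool: parse the span from the first '{' to the last '}' as JSON."""
    match = re.search(r"\{.*\}", response, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in response")
    return json.loads(match.group(0))


def normalize_keys(data: Dict[str, Any]) -> Dict[str, Any]:
    """Transformative tool: lower-case keys and strip whitespace from string values."""
    return {
        key.strip().lower(): value.strip() if isinstance(value, str) else value
        for key, value in data.items()
    }


def format_summary(data: Dict[str, Any]) -> str:
    """Utility tool: render the cleaned data as a single sorted line for display."""
    return ", ".join(f"{key}={value}" for key, value in sorted(data.items()))


# Integration: chain the tools so the LLM output matches what the app expects
raw = 'Sure! Here is the result: {"Product": " Laptop ", "Price": 999}'
cleaned = normalize_keys(extract_json(raw))
print(format_summary(cleaned))  # price=999, product=Laptop
```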

2.3. Data Indexing Chain

The data indexing chain is the backbone for efficiently storing and retrieving information from documents.

Process:

- Chunking: Documents are split into manageable chunks for efficient processing.

- Embeddings: These chunks are converted into embeddings, which are numerical representations suitable for storage in vector databases.

- Vector Database: Specialized databases such as Pinecone, Chroma, Weaviate, Milvus, Qdrant, and Elasticsearch (along with similarity-search libraries such as FAISS) store these embeddings in a way that allows fast and efficient retrieval. Although it may sound complicated, a vector database is essentially a database optimized for finding the stored vectors most similar to a query across large amounts of data.

Role:

- It lets you search large amounts of data quickly, which contributes to the efficiency of LLMOps applications.
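The chunking, embedding, storage, and retrieval flow described above can be sketched end to end without any external service. In this toy version, which is an illustration and not how Pinecone or Chroma work internally, the “embedding” is a simple bag-of-words count vector and the “vector database” is a Python list searched by cosine similarity; real systems use learned embedding models and approximate nearest-neighbor indexes. chunk_text, embed, cosine, and ToyVectorStore are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, size: int = 8, overlap: int = 2) -> List[str]:
    """Chunking: split text into windows of `size` words sharing `overlap` words with the previous one."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Start a new window only while it adds at least one word not in the previous window
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + size]) for i in starts]


def embed(text: str) -> Counter:
    """Toy 'embedding': lower-cased word counts, standing in for a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Stand-in 'vector database': (chunk, vector) pairs searched by brute force."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, chunks: List[str]) -> None:
        # Embed each chunk once, at indexing time
        self.items.extend((chunk, embed(chunk)) for chunk in chunks)

    def search(self, query: str, k: int = 1) -> List[str]:
        # Embed the query the same way, then rank stored chunks by similarity
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


doc = (
    "Pinecone and Chroma store embeddings for retrieval. "
    "Chunking splits long documents into smaller pieces. "
    "Cosine similarity ranks chunks against the query."
)
chunks = chunk_text(doc)
store = ToyVectorStore()
store.add(chunks)
print(store.search("How does chunking split documents?"))
# ['retrieval. Chunking splits long documents into smaller pieces.']
```

The overlap keeps a sentence that straddles a chunk boundary partially visible in both neighboring chunks, which is one reason chunk size and overlap are worth tuning.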

2.4. Best Practices for Core Chains

- Balanced Prompts: Creating prompts that balance specificity and generality will help LLMs provide useful and diverse responses.

- Tool Selection: Selecting and integrating tools that complement the required functionality of your application is critical to a smooth LLMOps workflow.

- Optimized Indexing: Tuning the data indexing process for efficient storage and retrieval can significantly improve application performance.

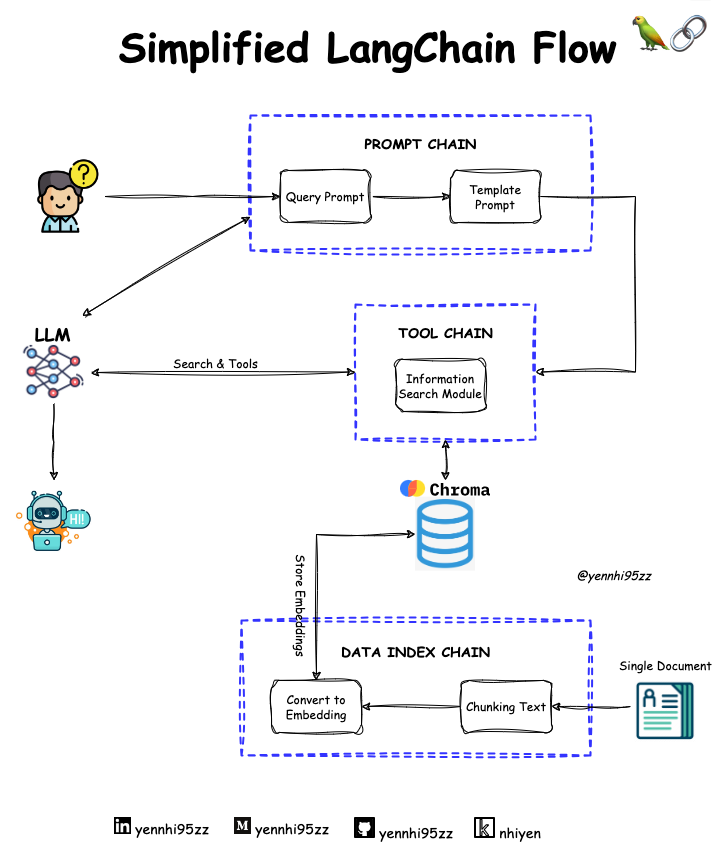

III. LangChain: The Glue that Connects

You have probably heard of LangChain. It is an innovative open-source framework for LLMOps that serves as a general-purpose interface for interacting with LLMs. It acts as a connector, allowing developers to combine LLMs with other tools and databases. The key idea behind LangChain is to go beyond simple question-and-answer interactions and create applications that leverage the full potential of LLMs.

In LLMOps, LangChain sits at the center, integrating LLMs, vector databases, and other tools. The chaining process uses this developer tool stack to connect models, databases, and other components into a complete LLM application.
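To make the idea of “glue” concrete, here is a hypothetical plain-Python sketch of one common pattern that LangChain-style pipelines wire together, retrieval-augmented generation: look up relevant text in a data store, merge it with the question in a prompt template, and send the result to the model. KNOWLEDGE, retrieve, answer, and fake_llm are illustrative stand-ins rather than LangChain classes; a real setup would use a vector database for retrieval and an LLM API for generation.

```python
from typing import Callable, Dict, List

# Hypothetical mini knowledge base standing in for a vector database
KNOWLEDGE: Dict[str, str] = {
    "refund": "Refunds are processed within 5 business days.",
    "shipping": "Standard shipping takes 3 to 7 days.",
}

TEMPLATE = "Answer using only this context:\n{context}\n\nQuestion: {question}"


def retrieve(question: str, store: Dict[str, str]) -> List[str]:
    """Keyword lookup standing in for vector search: return docs whose key appears in the question."""
    text = question.lower()
    return [doc for key, doc in store.items() if key in text]


def fake_llm(prompt: str) -> str:
    """Hypothetical model stub: 'answers' with the first line of context it was given."""
    return prompt.splitlines()[1]


def answer(question: str, llm: Callable[[str], str] = fake_llm) -> str:
    """The glue: retrieve context, fill the prompt template, call the model."""
    context = "\n".join(retrieve(question, KNOWLEDGE)) or "No relevant context found."
    return llm(TEMPLATE.format(context=context, question=question))


print(answer("How long does a refund take?"))
# Refunds are processed within 5 business days.
```

Because retrieve and llm are just callables, either piece can be swapped out without touching the rest of the pipeline, which is the kind of flexibility a connector framework aims to provide.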

Let’s take a look at a simplified version of LangChain below.

The main players in the chaining space to keep in mind are:

- LangChain: Raised approximately $10 million in seed funding.

- Llama Index: A remarkable open source tool.

- Haystack: Received approximately $9.2 million in seed funding and debt financing.

- AgentGPT: Level AI’s open-source project, backed by $20 million in Series B funding in 2022 and focused on call center applications.

👉 You might also be interested in “LangChain Conversation Memory Types,” covered in another article.

Conclusion

To wrap it up, chaining is the secret sauce that makes LLMs truly powerful in everyday use. LangChain is a top pick for developers who want to make the most of LLMOps, thanks to its flexible interface and easy integrations. Digging deep into chaining and LLM developer tools can set you apart in job searches. Keep following for more insights and learning opportunities. Cheers!

#LLM #LLMChain #LLMOps #ChainingDecoded #DifferentiateLLM